Architect

A Blueprint for Governable AI Systems

Architect is a backend AI system I designed and built to be a working implementation of the principles outlined in my research paper, “Exploring Societal Implications of Personalized, Memory-Enabled AI”. It moves beyond theoretical ethics to provide a concrete, engineered solution for creating assistants that are transparent, auditable, and accountable by design.

The Problem: AI’s Black Box Erodes Trust and Autonomy

As AI systems become more autonomous and personalized, they risk eroding user agency in three ways: opaque decision-making, scope creep in data usage, and manipulation through choice architecture. To build trustworthy AI, accountability cannot be an afterthought; it must be a core architectural component from day one.

The Solution: An Architecture of Principled Governance

Architect implements my “Auditable Governance Schematic” by pairing a small set of conceptual rules with a backend that enforces them. The system is built so that every action, especially one that touches memory, is subject to explicit, user-defined policies.

The Conceptual Model

The system’s behavior is guided by two core concepts from my research:

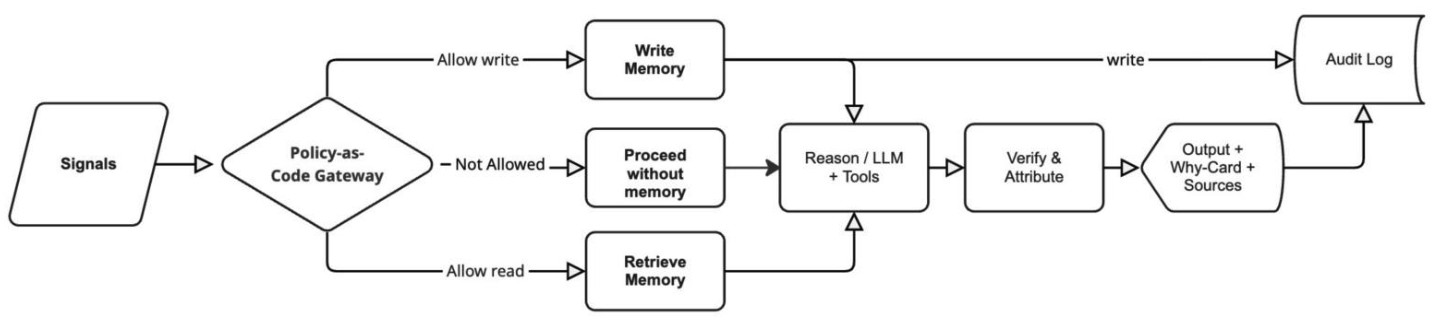

1. The “Coexistence Compact”: The system operates under a “deny-by-default” policy in which memory is user-controlled, scoped, minimized, and expiring. Every action that uses memory ships with a “Why-Card” that tells the user what was used and why.

2. Policy-as-Code Gateway: Every attempt by the AI to read from or write to memory is intercepted by a gateway. The gateway evaluates the intent against scope tags, sensitivity, and purpose before allowing the action to proceed. The decision is then signed and logged.
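To make the two concepts concrete, here is a minimal sketch of how a deny-by-default gateway could evaluate a memory intent and return a signed Why-Card. It is not Architect's actual code: the names `MemoryGrant`, `MemoryIntent`, `WhyCard`, `evaluate`, and `verify` are hypothetical, and HMAC-SHA256 is assumed as the signing scheme purely for illustration.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone

# All names below are hypothetical illustrations, not Architect's real API.


@dataclass(frozen=True)
class MemoryGrant:
    """A user-defined permission: scoped, purpose-bound, and expiring."""
    scope: str
    purpose: str
    max_sensitivity: int
    expires_at: datetime


@dataclass(frozen=True)
class MemoryIntent:
    """What the assistant is asking to do with memory."""
    action: str  # "read" or "write"
    scope: str
    purpose: str
    sensitivity: int


@dataclass(frozen=True)
class WhyCard:
    """The explanation attached to every decision."""
    allowed: bool
    action: str
    scope: str
    purpose: str
    reason: str
    signature: str = ""


def _sign(payload: dict, key: bytes) -> str:
    # Assumed signing scheme: HMAC-SHA256 over canonical (sorted-key) JSON.
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def evaluate(intent, grants, key, now=None) -> WhyCard:
    """Deny unless a live grant matches scope, purpose, and sensitivity."""
    now = now or datetime.now(timezone.utc)
    allowed, reason = False, "no matching grant (deny by default)"
    for grant in grants:
        if (grant.scope, grant.purpose) != (intent.scope, intent.purpose):
            continue
        if grant.expires_at <= now:
            reason = "matching grant has expired"
            continue
        if intent.sensitivity > grant.max_sensitivity:
            reason = "sensitivity exceeds granted level"
            continue
        allowed, reason = True, f"granted for purpose '{grant.purpose}'"
        break
    payload = {"allowed": allowed, "action": intent.action,
               "scope": intent.scope, "purpose": intent.purpose,
               "reason": reason}
    return WhyCard(**payload, signature=_sign(payload, key))


def verify(card: WhyCard, key: bytes) -> bool:
    """Recompute the signature to check the card was not altered."""
    payload = asdict(card)
    signature = payload.pop("signature")
    return hmac.compare_digest(signature, _sign(payload, key))


if __name__ == "__main__":
    key = b"demo-key"  # placeholder; a real key would come from a secret store
    grant = MemoryGrant("calendar", "scheduling", 1,
                        datetime.now(timezone.utc) + timedelta(days=30))
    card = evaluate(MemoryIntent("read", "calendar", "scheduling", 1),
                    [grant], key)
    print(card.allowed, "-", card.reason)
```

In this sketch the default outcome is a denial, and a grant only changes it when scope, purpose, sensitivity, and expiry all pass. The Why-Card carries the reason in either case, so a refusal is as explainable as an approval.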

The Technical Implementation

This governance model is implemented in a modular, layered Python backend built for clarity and testability:

- API Layer: Provides REST and WebSocket endpoints for all external interaction.

- Privacy Guard: The core technical implementation of the gateway, handling data redaction, access control, and immutable audit logging.

- Model Driver: Manages and routes requests to different language models and logs all usage for observability.

- Persistence Layer: Manages database models and migrations for storing memory and audit logs.

- CI & Testing: The system is supported by a full suite of unit and integration tests, health checks, and a CI pipeline running on GitHub Actions.
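As an illustration of the Privacy Guard's redaction and audit-logging roles, the sketch below pairs a simple redaction pass with an append-only, hash-chained log. This is one common way to make a log tamper-evident and is an assumption here; the write-up does not say how Architect achieves immutability. The names `redact`, `AuditLog`, and the email pattern are hypothetical.

```python
import hashlib
import json
import re

# Hypothetical sketch; not Architect's actual Privacy Guard.

# A deliberately simple email pattern, used only for demonstration.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact(text: str) -> str:
    """Replace email addresses before the text is stored or logged."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)


class AuditLog:
    """Append-only log in which each entry commits to the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        body = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + body).encode()).hexdigest()

    def append(self, event: dict) -> dict:
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        entry = {"event": event, "prev": prev,
                 "hash": self._digest(prev, event)}
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Walk the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"action": "write",
                "note": redact("Contact alice@example.com on Monday")})
    log.append({"action": "read", "scope": "calendar"})
    print(log.verify())
```

A hash chain detects after-the-fact edits but does not prevent them; a production system would typically also anchor the latest hash somewhere the application cannot rewrite.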

Impact & Status

Architect is more than a prototype; it is a reference implementation that shows how a powerful, personalized AI can be built without sacrificing user control. It provides a research-backed blueprint for developers and organizations aiming to create ethically aligned intelligent systems.

The project is currently about 85% of the way to an MVP, with ongoing work to stabilize the agent interfaces and build the second version of the evaluation harness.